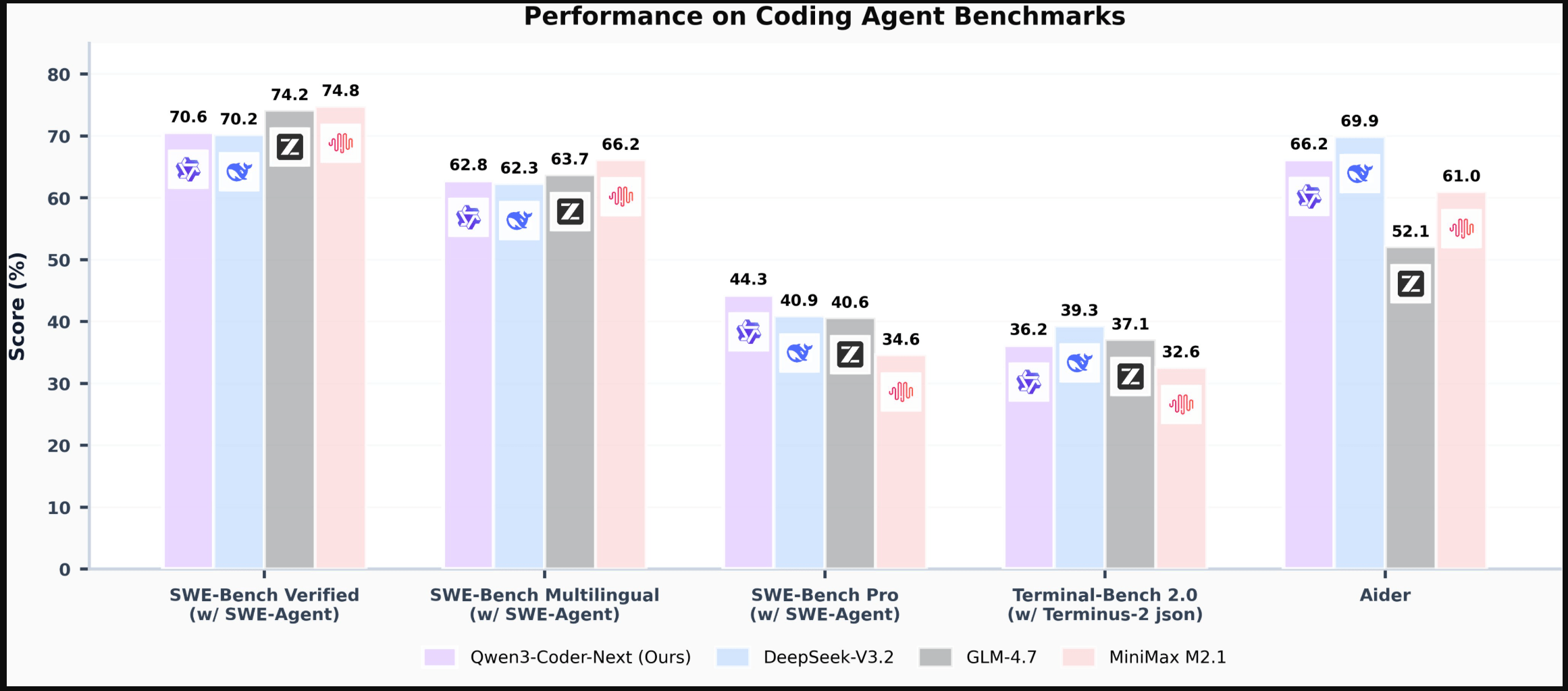

According to Alibaba, the model matches or outperforms several significantly larger open-source models across a range of agent-based evaluations, despite its low active memory footprint. On SWE-Bench Verified, it scores above 70% when run with the SWE-Agent framework.

Qwen3-Coder-Next supports a 256,000-token context length and can be integrated into multiple development environments, including Claude Code, Qwen Code, Qoder, Kilo, Trae, and Cline. For local deployment, it is supported by tools such as Ollama, LM Studio, MLX-LM, llama.cpp, and KTransformers.

The model is available on Hugging Face and ModelScope under the Apache-2.0 license. Additional details can be found in Alibaba’s blog post and the technical report published on GitHub.
