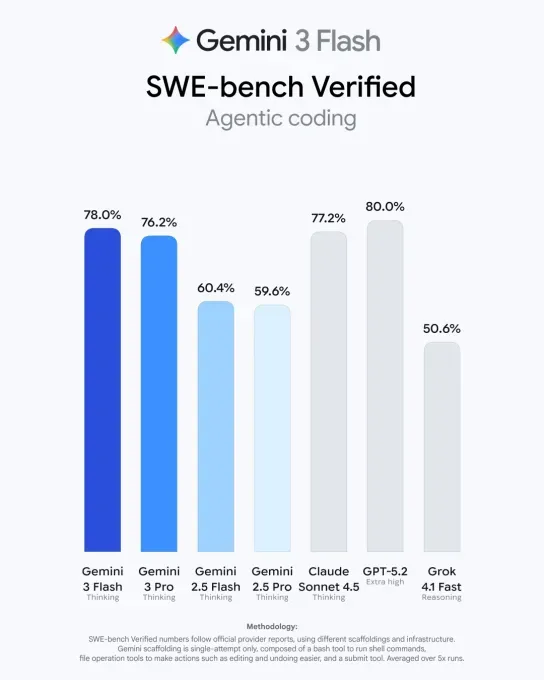

According to Google's internal benchmarks, the new lightweight model outperforms its predecessor, Gemini 2.5 Flash, and in several tests comes close to the performance of flagship systems such as Gemini 3 Pro and GPT-5.2.

In the Humanity’s Last Exam benchmark, designed to assess broad cross-domain knowledge, Gemini 3 Flash scored 33.7%. For comparison, Gemini 3 Pro achieved 37.5%, Gemini 2.5 Flash reached 11%, and GPT-5.2 scored 34.5%.

The model showed particularly strong results on MMMU-Pro, a benchmark that evaluates multimodal understanding and reasoning, where Gemini 3 Flash achieved 81.2%, outperforming all competing models. On SWE-bench, which measures software engineering capabilities, Gemini 3 Flash scored 78%, trailing GPT-5.2. Google emphasized that Gemini 3 Flash is especially well suited for video analysis, data extraction, and visual question-answering tasks.

Google has now fully replaced Gemini 2.5 Flash with Gemini 3 Flash as the default model in the Gemini app. Users can still manually select Gemini Pro for more advanced tasks in mathematics and programming.

The company says the new model demonstrates stronger multimodal comprehension and produces more context-aware responses. For example, users can upload a short sports video—such as a pickleball clip—and ask the assistant for practical tips. Gemini 3 Flash also better interprets user intent and can generate richer answers using interactive elements like images and tables.

Pricing and efficiency

Gemini 3 Flash is priced at $0.50 per million input tokens and $3 per million output tokens, compared with $0.30 and $2.50 respectively for Gemini 2.5 Flash. However, Google notes that the new model uses roughly 30% fewer tokens on average for reasoning-heavy tasks compared with Gemini 2.5 Pro, which can make it more cost-effective in real-world usage despite higher nominal rates.
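To make the pricing trade-off concrete, here is a minimal sketch that computes per-request cost from the rates listed above for a hypothetical workload. The model keys, token counts, and helper names are illustrative assumptions, not part of any Google API. Note that Google's 30% figure is measured against Gemini 2.5 Pro, not 2.5 Flash, so the sketch does not reproduce that claim; it only shows how large a token saving Gemini 3 Flash would need to offset its higher rates relative to Gemini 2.5 Flash.

```python
# Minimal cost sketch using the per-million-token prices quoted in the article (USD).
# Model keys and token counts are hypothetical, chosen only for illustration.
PRICES = {
    "gemini-3-flash": {"input": 0.50, "output": 3.00},
    "gemini-2.5-flash": {"input": 0.30, "output": 2.50},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request at the listed per-million-token rates."""
    rates = PRICES[model]
    return (input_tokens * rates["input"] + output_tokens * rates["output"]) / 1_000_000


def break_even_saving(new_rate: float, old_rate: float) -> float:
    """Fraction of tokens the pricier model must save to match the cheaper model's cost."""
    return 1 - old_rate / new_rate


if __name__ == "__main__":
    # Hypothetical request: 10,000 input tokens and 2,000 output tokens.
    for model in PRICES:
        print(f"{model}: ${request_cost(model, 10_000, 2_000):.4f}")
    # Output-token saving Gemini 3 Flash needs to match Gemini 2.5 Flash on output pricing.
    saving = break_even_saving(PRICES["gemini-3-flash"]["output"],
                               PRICES["gemini-2.5-flash"]["output"])
    print(f"break-even output-token saving: {saving:.1%}")
```

At equal token counts, this hypothetical request costs about $0.0110 on Gemini 3 Flash versus $0.0080 on Gemini 2.5 Flash. On output pricing alone, Gemini 3 Flash would need to generate roughly 16.7% fewer tokens to break even, so any real-world savings depend on how much shorter its responses turn out to be for a given workload.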

Opal arrives in Gemini

Google has also integrated Opal, its tool for “vibe coding” and building mini-applications, into the Gemini ecosystem. Opal is part of Gems, customizable versions of Gemini introduced in 2024 for specific use cases such as learning assistance, brainstorming, career guidance, or programming support.

With Opal, users can create simple applications or combine existing Gems by describing the desired functionality in natural language. A visual editor then displays the steps required to assemble the tool, lowering the barrier to experimentation and rapid prototyping.

Rising competition with OpenAI

Google’s progress is intensifying competition with OpenAI. The company’s AI momentum has grown following the launch of Gemini 3, which Google previously described as its most capable model to date, and the release of its updated image-generation tool Nano Banana Pro.

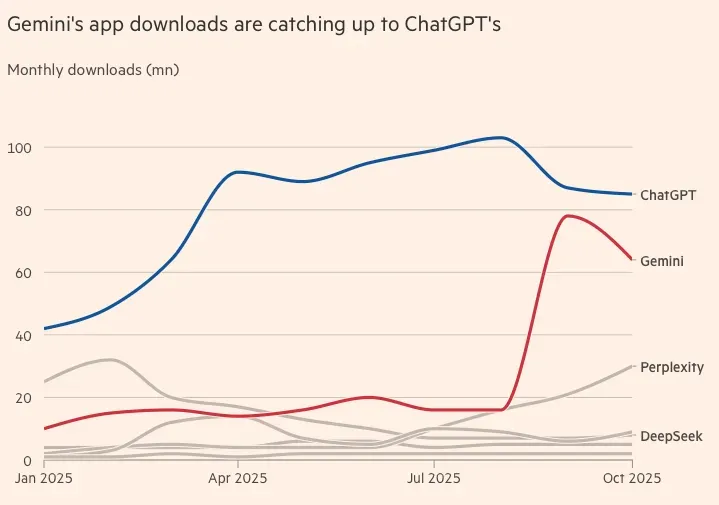

Gemini’s adoption is accelerating and is increasingly approaching ChatGPT in terms of popularity. While OpenAI’s chatbot still dominates the market—accounting for roughly 50% of global mobile AI app downloads and 55% of monthly active users—Gemini is growing faster in downloads, user acquisition, and time spent in the app.

This trend suggests that the gap between Gemini and ChatGPT may continue to narrow, a possibility that has reportedly raised concerns inside OpenAI, as reflected in its recently leaked internal “code red” memo.
