Learning new programming skills with AI assistance can significantly reduce knowledge retention, according to a new Anthropic study that warns against overly aggressive AI integration in the workplace.

Software developers who relied on AI assistance while learning a new programming library developed a weaker understanding of the underlying concepts, the study found.

For the experiment, researchers recruited 52 mostly junior software developers who had at least one year of regular Python experience and prior exposure to AI assistants but were unfamiliar with Trio, an asynchronous programming library for Python. Participants were randomly split into two groups: one group had access to an AI assistant based on GPT-4o, while the control group could only use documentation and web search. Both groups were asked to complete two Trio-based programming tasks as quickly as possible.

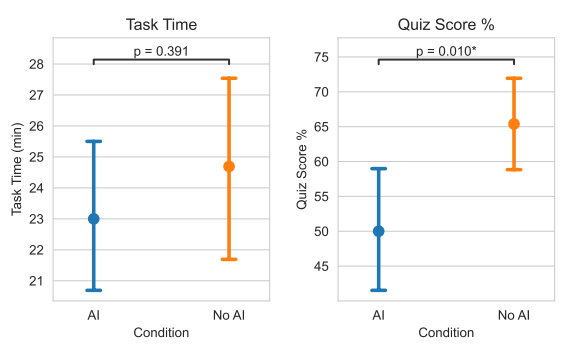

Afterward, all participants took a conceptual knowledge test. The key result: developers with AI access scored 17% lower on the test than those without AI assistance. At the same time, AI usage did not lead to a statistically significant reduction in task completion time.

“Our results suggest that aggressive AI integration in the workplace, particularly in software development, comes with trade-offs,” write researchers Judy Hanwen Shen and Alex Tamkin.

Six usage patterns, very different learning outcomes

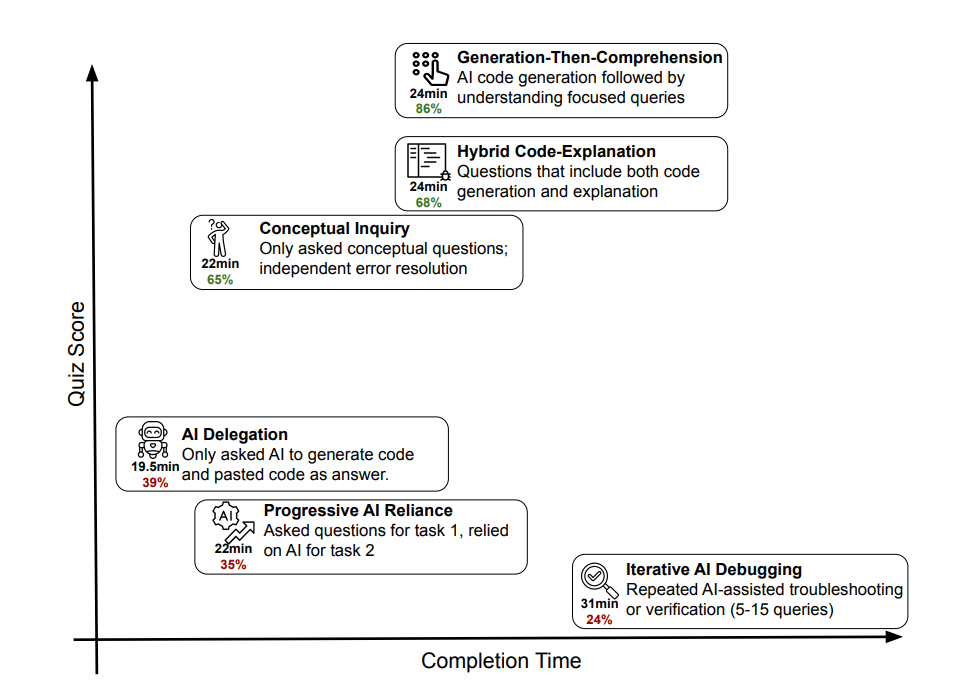

How developers interacted with the AI had a major impact on learning. A qualitative analysis of screen recordings from 51 participants revealed six distinct usage patterns. Three of them led to particularly poor learning outcomes, with quiz scores between 24% and 39%.

Participants who fully delegated the task to the AI finished fastest but scored only 39% on the test. Similarly weak results were seen among those who started coding themselves but increasingly relied on AI-generated solutions. The worst outcomes occurred when participants repeatedly used AI for debugging without understanding the errors.

By contrast, the other three patterns preserved learning outcomes, with quiz scores ranging from 65% to 86%. The most effective strategy was to let the AI generate code and then ask targeted questions to understand it. Requesting explanations alongside generated code, or using the AI only for conceptual questions, also proved effective.

Too much chatting hurts productivity

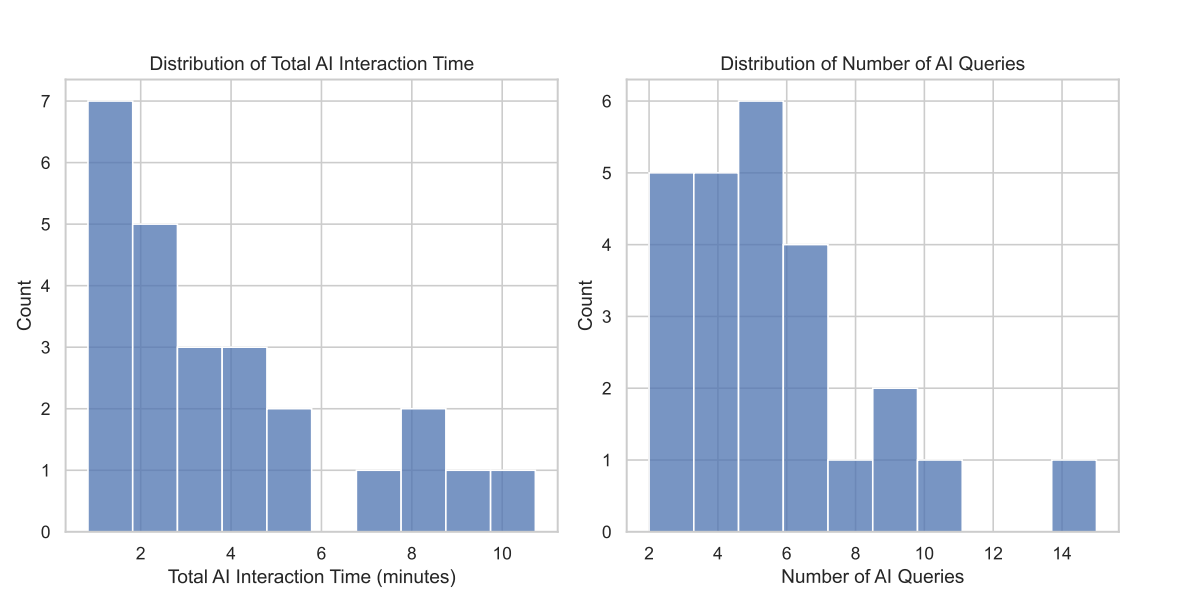

Unlike earlier studies on AI-assisted programming, this research found no clear productivity gains. AI users were not significantly faster overall. The qualitative analysis explains why: some participants spent up to 11 minutes just interacting with the AI, crafting prompts and follow-up questions.

Only about 20% of the AI group used the assistant exclusively for code generation. That subgroup was faster than the control group but also showed the worst learning outcomes. The remaining participants asked for explanations or spent time understanding AI-generated code, which offset any speed gains. The researchers suggest AI boosts productivity mainly for repetitive or already familiar tasks, not for learning something new.

This helps explain the apparent contradiction with earlier studies that reported large productivity gains: those studies measured performance on tasks where participants already had the necessary skills. This study focused on learning.

AI reduces learning from mistakes

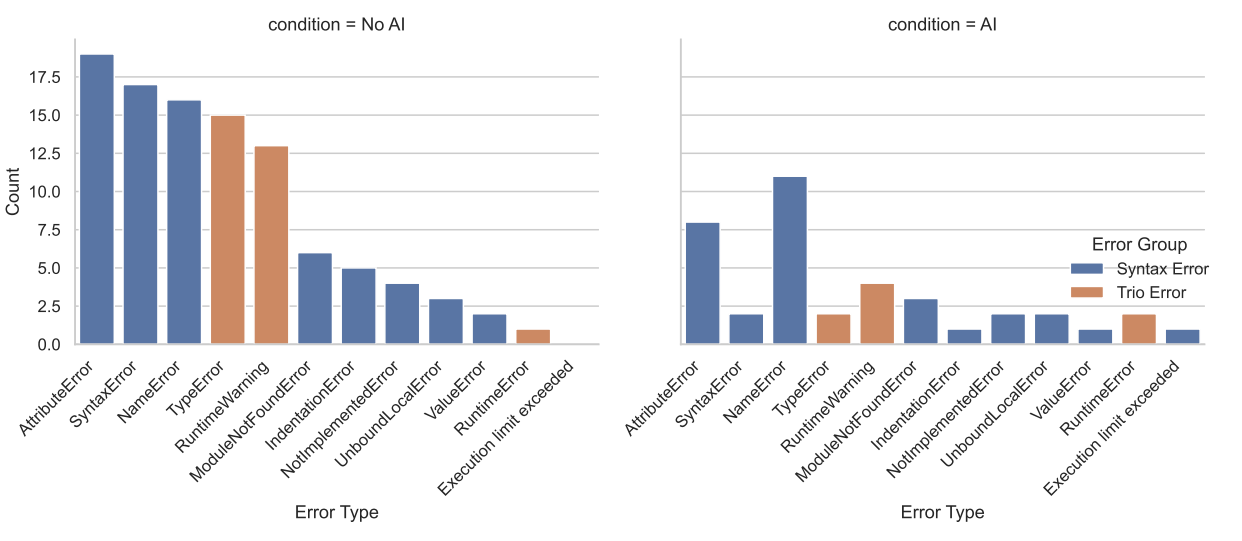

The control group, working without AI, made more errors and ran into them about three times as often as the AI group. According to the researchers, these mistakes forced deeper critical thinking. Struggling with problems, even painfully, appears to play an important role in building expertise.

The biggest gaps in test performance appeared in debugging-related questions. The control group encountered more Trio-specific issues, such as runtime warnings caused by unexpected coroutines, which likely strengthened their understanding of core concepts.
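For readers unfamiliar with async Python, here is a minimal sketch of one common source of such warnings: calling an async function without `await`. It is an illustration, not a reproduction of the study's tasks. It uses only the standard library; `sleep_briefly` is a hypothetical stand-in for a Trio call such as `trio.sleep()`, and `asyncio.run()` substitutes for `trio.run()` so the example runs without Trio installed.

```python
import asyncio
import gc
import warnings


async def sleep_briefly() -> str:
    # Hypothetical stand-in for an async library call such as trio.sleep().
    return "done"


def call_without_await() -> list[str]:
    """Call an async function but forget `await`; return the RuntimeWarning messages."""
    with warnings.catch_warnings(record=True) as caught:
        warnings.simplefilter("always")
        coro = sleep_briefly()  # only creates a coroutine object; the body never runs
        del coro                # discarded without ever being awaited
        gc.collect()            # force finalization on interpreters without refcounting
    return [str(w.message) for w in caught if issubclass(w.category, RuntimeWarning)]


async def call_with_await() -> str:
    # The correct version: awaiting the coroutine actually runs its body.
    return await sleep_briefly()


if __name__ == "__main__":
    # Prints the captured "coroutine ... was never awaited" warning text.
    print(call_without_await())
    # asyncio.run() stands in for trio.run() so no third-party package is needed.
    print(asyncio.run(call_with_await()))  # done
```

Because the un-awaited call does not raise an error and the program simply continues, diagnosing the problem requires understanding what a coroutine object is and when its body actually executes.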

Implications for the workplace

The findings raise concerns for safety-critical applications. If people are expected to review and debug AI-generated code, they must retain strong skills themselves. Those skills may erode if AI use undermines learning.

“AI-assisted productivity is not a shortcut to competence,” the researchers conclude. Preserving learning requires cognitive effort: using AI for conceptual clarification or explanations can help, while blind delegation harms understanding.

The study examined only a one-hour task using a chat-based interface. More autonomous, agent-based systems such as Claude Code, which require even less human involvement, could amplify the negative effects on skill development. Although they did not test this, the authors note it is plausible that similar effects occur in other knowledge-work domains, such as writing or planning.

Notably, Anthropic has published results that could undermine its own business model. The company profits from AI assistants like Claude, yet its research team openly warns about their potential downsides, an unusual level of transparency in today's tech industry.
