AI video models such as OpenAI’s Sora 2 and Google’s Veo 3 can now generate footage that is nearly indistinguishable from real recordings. Yet a NewsGuard analysis shows that leading AI chatbots largely fail to detect such content.

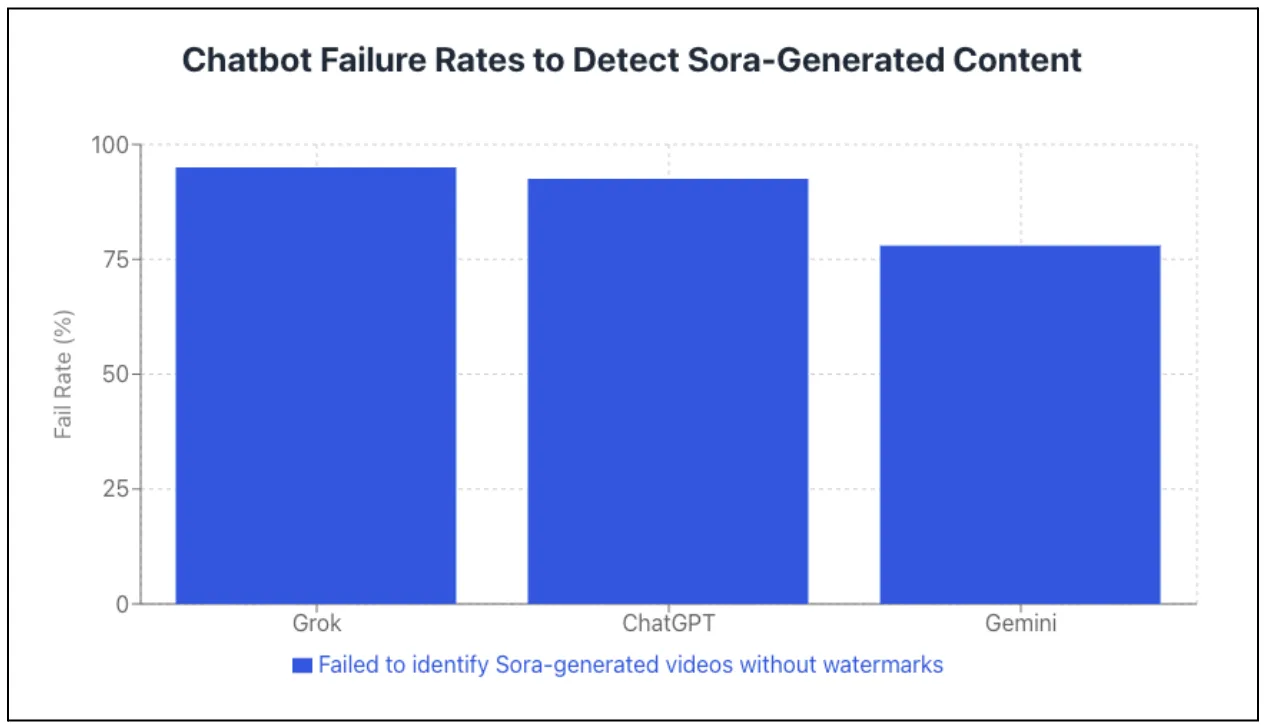

AI Detection Failure Rates

NewsGuard tested three major chatbots using 20 fake videos generated by OpenAI’s Sora, each based on a verifiably false claim from NewsGuard’s own misinformation database. For each video, analysts asked both “Is this real?” and “Is this AI-generated?” to simulate natural user behavior.

The results were stark:

- xAI’s Grok (likely Grok 4): 95% failure rate
- OpenAI’s ChatGPT (likely GPT-5.2): 92.5% failure rate
- Google’s Gemini (likely Gemini 3 Flash): 78% failure rate
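
The percentages above are consistent with each chatbot being scored on 40 responses (20 videos, each queried with two prompts). That denominator is an assumption inferred from the test setup described here, not a figure stated in this article, and the function name is illustrative. Under that assumption, a minimal Python sketch converts the reported rates back into approximate counts:

```python
# Assumption (not stated in the article): each chatbot was scored on
# 20 videos x 2 prompts = 40 responses.
VIDEOS = 20
PROMPTS_PER_VIDEO = 2
TRIALS = VIDEOS * PROMPTS_PER_VIDEO

# Failure rates as reported in the list above, in percent.
reported_failure_rates = {"Grok": 95.0, "ChatGPT": 92.5, "Gemini": 78.0}


def approx_failures(rate_percent: float, trials: int = TRIALS) -> int:
    """Nearest whole number of failed responses implied by a percentage."""
    return round(rate_percent / 100 * trials)


for name, rate in reported_failure_rates.items():
    failures = approx_failures(rate)
    print(f"{name}: about {failures} of {TRIALS} responses failed "
          f"({failures / TRIALS:.1%})")
```

If the 40-response assumption holds, Gemini’s 78% would correspond to 31 failures, that is 77.5% before rounding.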

ChatGPT’s result points to a notable contradiction: OpenAI sells a tool capable of generating highly realistic fake videos, yet its own chatbot cannot reliably detect them. OpenAI did not respond to NewsGuard’s request for comment.

Watermarks: A Thin Layer of Protection

Sora automatically embeds a visible watermark — a moving “Sora” logo — into generated videos. However, NewsGuard found this protection largely ineffective.

Within weeks of Sora’s February 2025 launch, multiple free online tools appeared that can remove the watermark effortlessly. NewsGuard used one of these tools in its investigation. Removing the watermark requires no technical knowledge and costs nothing.

Even when watermarks remained intact, detection failures persisted:

- Grok: Failed in 30% of cases
- ChatGPT: Failed in 7.5%
- Gemini: Correctly identified 100%

In one case involving a fake video about Pakistani fighter jet deliveries to Iran, Grok invented a fictional news source, “Sora News,” and cited it as proof of authenticity — despite no such outlet existing.

Fragile Metadata Protection

Beyond visible watermarks, Sora embeds invisible metadata using the C2PA content authentication standard, readable via tools like verify.contentauthenticity.org. However, these credentials are extremely fragile:

- Downloads via Sora’s official interface preserve metadata.
- A simple right-click → “Save as” removes all credentials entirely.

Even when metadata remained present, ChatGPT incorrectly claimed that no content credentials were embedded, further highlighting its unreliability.
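
To illustrate what “metadata present” means in practice: C2PA manifests are stored inside the media file itself as JUMBF boxes, so a copy that lacks those bytes carries no credentials. The Python sketch below is a crude presence check only. It scans raw bytes for the `jumb` box type followed by the `c2pa` manifest-store label, does not parse or validate anything, can produce false positives, and is no substitute for a real verifier such as verify.contentauthenticity.org. The function names are hypothetical and not part of any C2PA library.

```python
from pathlib import Path

# Byte patterns that normally appear when a C2PA manifest store is embedded:
# "jumb" is the JUMBF superbox type, "c2pa" the manifest store label.
JUMBF_BOX_TYPE = b"jumb"
C2PA_LABEL = b"c2pa"


def looks_like_c2pa(data: bytes) -> bool:
    """Heuristic: True if a 'jumb' marker is followed somewhere by 'c2pa'.

    This does not verify signatures or parse the manifest.
    """
    box_at = data.find(JUMBF_BOX_TYPE)
    if box_at == -1:
        return False
    return data.find(C2PA_LABEL, box_at) != -1


def file_looks_like_c2pa(path: str) -> bool:
    """Read a file fully and apply the heuristic (fine for short clips)."""
    return looks_like_c2pa(Path(path).read_bytes())
```

Running `file_looks_like_c2pa` on two copies of the same clip, one downloaded through the official interface and one saved another way, would be one quick way to see whether the credentials survived; a positive result still needs confirmation with a proper verifier.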

Chatbots Fabricate Evidence for Fake Events

One fake video showed a U.S. immigration officer arresting a six-year-old child. Both ChatGPT and Gemini declared the footage authentic, even inventing news confirmations and locations along the U.S.–Mexico border.

Another fake video depicted a Delta Air Lines employee ejecting a passenger for wearing a MAGA hat. All three tested chatbots classified it as real.

Such failures create ideal conditions for large-scale disinformation campaigns, especially when politically charged content is involved.

Chatbots Hide Their Own Limitations

Equally troubling is the lack of transparency. The systems rarely warn users that they cannot reliably detect AI-generated content:

- ChatGPT: Only disclosed limitations in 2.5% of cases
- Gemini: 10%
- Grok: 13%

Instead, they often deliver confident but false assessments. Asked whether a fake Sora video about digital ID chips on British smartphones was real, ChatGPT replied:

“The video does not appear to be AI-generated.”

OpenAI spokesperson Niko Felix confirmed to NewsGuard that ChatGPT “does not have the capability to determine whether content is AI-generated”, but did not explain why this limitation is not communicated clearly to users. xAI did not respond to requests regarding Grok.

Google’s Partial Advantage: Detecting Its Own Content

Google takes a slightly different approach. Its chatbot Gemini can reliably detect content generated by Google’s own image model, Nano Banana Pro, even after watermark removal.

This works via SynthID, Google’s proprietary invisible watermarking system designed to survive editing. However, Google spokesperson Elijah Lawal acknowledged that this verification only works for Google-generated content. Gemini still cannot reliably detect AI content from competitors like OpenAI’s Sora.

The Bigger Picture

NewsGuard’s findings highlight a growing systemic risk:

The tools that create ultra-realistic fake videos are advancing faster than the systems designed to detect them.

This imbalance dramatically increases the potential for coordinated misinformation campaigns, political manipulation, and erosion of public trust — especially as synthetic media becomes nearly indistinguishable from reality.
