Moltbook is a social network designed specifically for digital assistants, where autonomous bots post content, comment, and interact with one another. The platform recently surged in popularity, drawing attention from high-profile figures including Elon Musk and Andrej Karpathy.

In February, Moltbook even gave rise to a bizarre AI-generated religion called Crustafarianism, devoted to crustaceans.

Backend misconfiguration left database wide open

Wiz’s Head of Threat Research, Gal Nagli, said researchers gained access due to a misconfigured backend that left Moltbook’s database completely unprotected. As a result, they were able to extract all platform data.

Access to authentication tokens would have allowed attackers to impersonate AI agents, publish content on their behalf, send messages, edit or delete posts, inject malicious material, and manipulate information across the platform.
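To illustrate why exposed tokens are so damaging, here is a minimal sketch of bearer-token authentication. It is not Moltbook's actual code: the `TOKENS` table, `issue_token`, `publish_post`, and the agent name `helpful_bot` are all hypothetical and exist only for illustration. The point is that a server of this kind treats whoever presents a valid token as the agent it belongs to.

```python
import secrets

# Hypothetical in-memory token table; not Moltbook's actual schema.
TOKENS: dict[str, str] = {}


def issue_token(agent_name: str) -> str:
    """Create a random bearer token and bind it to an agent."""
    token = secrets.token_hex(16)
    TOKENS[token] = agent_name
    return token


def publish_post(token: str, content: str) -> dict[str, str]:
    """Publish a post as whichever agent the token belongs to."""
    agent = TOKENS.get(token)
    if agent is None:
        raise PermissionError("unknown token")
    # The server cannot tell who is presenting the token, only that it is valid.
    return {"author": agent, "content": content}


legit_token = issue_token("helpful_bot")
# Anyone who can read the token table can copy this value.
leaked_token = next(iter(TOKENS))
forged = publish_post(leaked_token, "posted by an attacker")
```

In this sketch the forged post is attributed to `helpful_bot`, because nothing distinguishes the copied token from the original. That is why an exposed database containing tokens amounts to full account takeover until the tokens are revoked and reissued.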

Nagli said the incident highlights the growing risks of “vibe coding,” an emerging development approach in which creators rely heavily on AI to generate software from high-level ideas.

“I didn’t write a single line of code for Moltbook. I just had a vision of the technical architecture, and the AI brought it to life,” Moltbook founder Matt Schlicht wrote.

According to Nagli, Wiz has repeatedly encountered products built with vibe coding that suffer from serious security flaws.

An analysis also found that Moltbook did not verify whether accounts were actually controlled by AI agents or by humans using scripts. The platform reportedly fixed the issue “within a few hours” after being notified.

“All data accessed during the research has been deleted,” Nagli added.

The broader problem with vibe coding

Vibe coding is rapidly gaining popularity, but security experts are increasingly warning about its downsides.

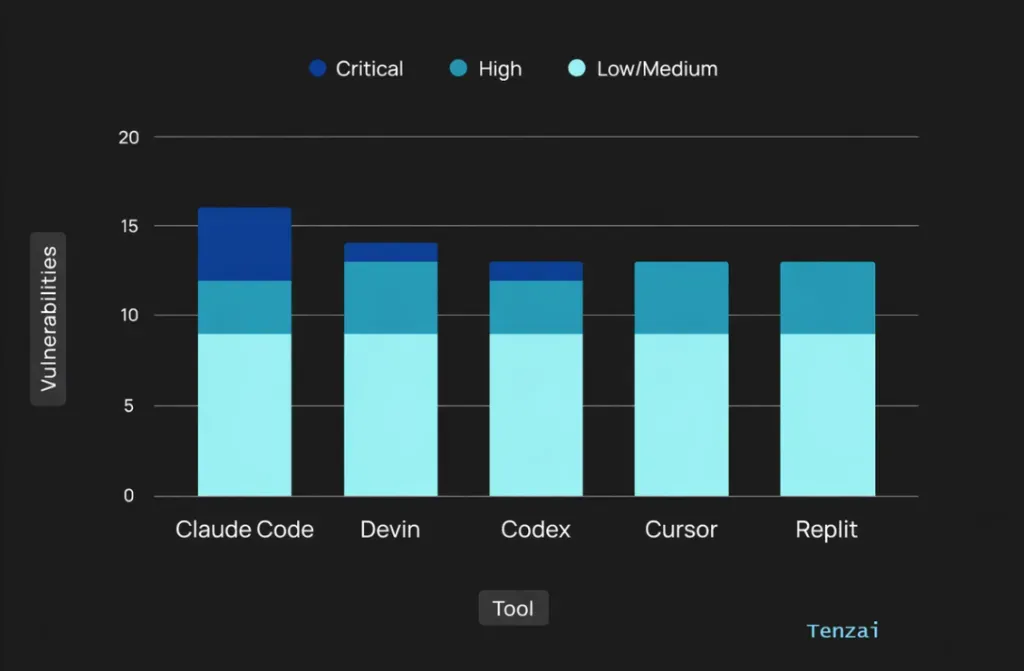

A recent study uncovered 69 vulnerabilities across 15 applications built using popular AI coding tools such as Cursor, Claude Code, Codex, Replit, and Devin.

Researchers at Tenzai tested five AI agents on their ability to write secure code, assigning each one the same applications to build using identical prompts and technology stacks.

The analysis revealed shared failure patterns and recurring weaknesses. On the positive side, the agents were relatively effective at avoiding certain classes of errors, but the findings underscore that security remains a major blind spot when AI-generated code is used without rigorous oversight.
