A bioacoustic foundation model from Google DeepMind, trained largely on bird vocalizations, outperforms models trained specifically on whales at classifying underwater animal sounds. Part of the researchers’ explanation comes from evolutionary biology.

Because visual contact underwater is often impossible, the behavior of whales and dolphins is frequently studied through their vocalizations. Developing reliable AI classifiers for underwater sounds, however, is difficult. Data collection requires expensive specialized equipment, and new sound types are sometimes not attributed to a species until decades after they are first recorded.

A team from Google DeepMind and Google Research now shows in a paper that a very different approach can work. Their bioacoustic foundation model, Perch 2.0, which was trained primarily on bird sounds, ranks first or second on nearly every whale-sound classification task tested. It comes out ahead of a Google model trained specifically on whales.

A bird model recognizes whales

The 101.8-million-parameter Perch 2.0 model was trained on more than 1.5 million animal sound recordings covering at least 14,500 species. The majority of these are birds, supplemented by insects, mammals, and amphibians. Underwater recordings are virtually absent from the training data. According to the paper, there are only about a dozen whale recordings, most of them captured above water using mobile phones.

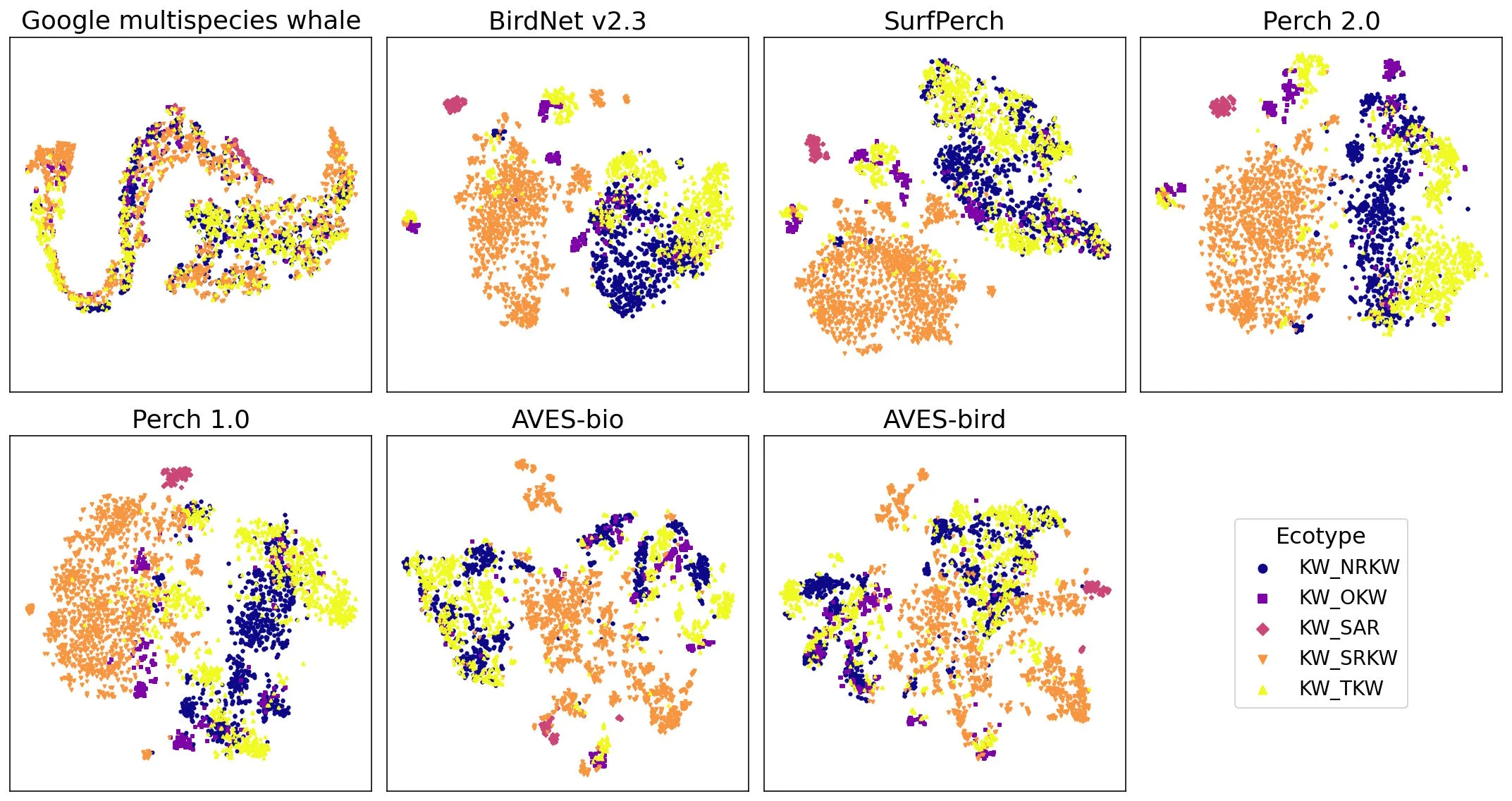

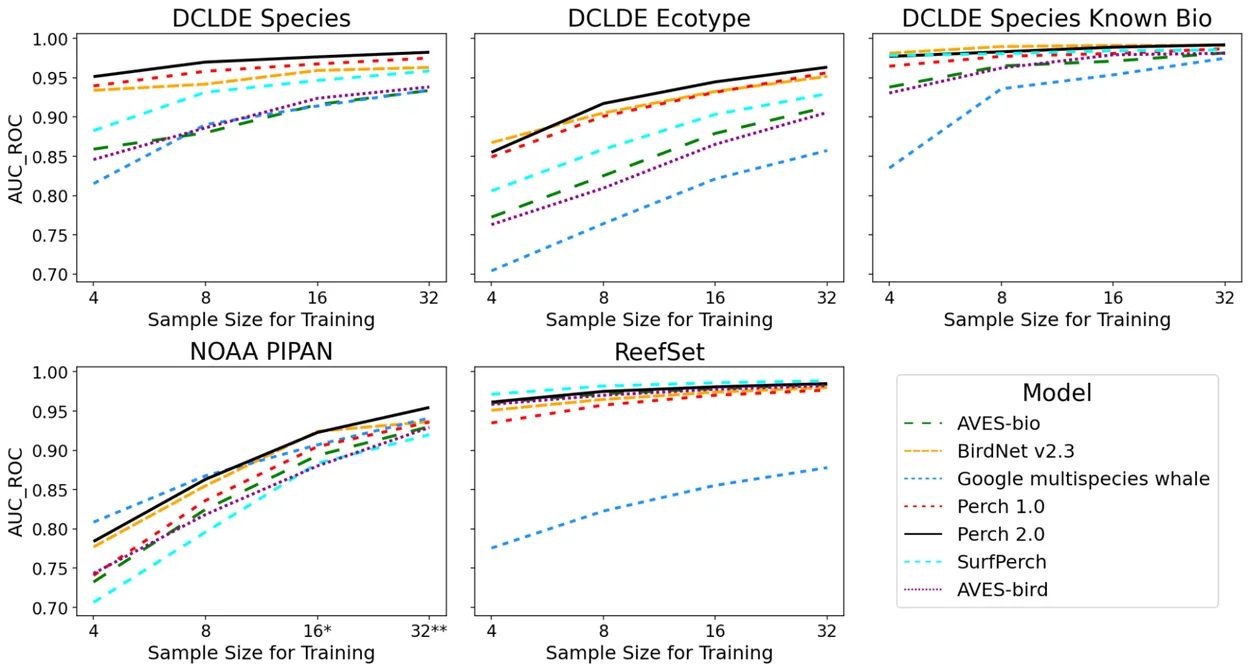

To test how well the bird-trained model performs underwater, the researchers evaluated it on three marine datasets:

- recordings of baleen whale species from the Pacific (NOAA PIPAN)
- reef sounds such as crackling and grunting (ReefSet)
- more than 200,000 annotated orca and humpback whale calls (DCLDE 2026)

The model generates a compact numerical representation — an embedding — for each recording. Using these embeddings, a simple classifier is trained with only a small number of labeled examples to assign sounds to the correct species.
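In machine-learning terms, this setup is a "linear probe." The foundation model stays frozen, and only a small classifier is fitted on its outputs.

What follows is a minimal pure-Python sketch under stated assumptions, not the paper's code:

- Synthetic clustered vectors stand in for real Perch 2.0 embeddings.
- The helper `make_fake_embeddings`, the 8-dimensional vectors, and the learning rate and epoch count are hypothetical illustration choices.
- Only the 16 labeled examples per class mirror the few-shot setting the researchers report.

```python
import math
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_linear_probe(xs, ys, num_classes, lr=0.5, epochs=200):
    """Multinomial logistic regression on frozen embeddings (batch gradient descent)."""
    dim, n = len(xs[0]), len(xs)
    w = [[0.0] * dim for _ in range(num_classes)]
    b = [0.0] * num_classes
    for _ in range(epochs):
        gw = [[0.0] * dim for _ in range(num_classes)]
        gb = [0.0] * num_classes
        for x, y in zip(xs, ys):
            p = softmax([dot(w[c], x) + b[c] for c in range(num_classes)])
            for c in range(num_classes):
                err = p[c] - (1.0 if c == y else 0.0)  # gradient of cross-entropy wrt logit c
                gb[c] += err
                for d in range(dim):
                    gw[c][d] += err * x[d]
        for c in range(num_classes):
            b[c] -= lr * gb[c] / n
            for d in range(dim):
                w[c][d] -= lr * gw[c][d] / n
    return w, b

def predict(w, b, x):
    scores = [dot(wc, x) + bc for wc, bc in zip(w, b)]
    return scores.index(max(scores))

def make_fake_embeddings(num_classes, per_class, dim, rng):
    """Hypothetical stand-in for model embeddings: one noisy cluster per class."""
    xs, ys = [], []
    for c in range(num_classes):
        center = [2.0 if d == c else 0.0 for d in range(dim)]
        for _ in range(per_class):
            xs.append([v + rng.gauss(0.0, 0.3) for v in center])
            ys.append(c)
    return xs, ys

rng = random.Random(0)
train_x, train_y = make_fake_embeddings(3, 16, 8, rng)  # 16 labeled examples per class
test_x, test_y = make_fake_embeddings(3, 50, 8, rng)
w, b = train_linear_probe(train_x, train_y, num_classes=3)
accuracy = sum(predict(w, b, x) == y for x, y in zip(test_x, test_y)) / len(test_y)
print(f"held-out accuracy: {accuracy:.2f}")
```

The embedding model is never updated, so training touches only `num_classes × (dim + 1)` parameters. That is why such a classifier can be fitted quickly even with very few labels.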

Specialized whale AI performs worse

The researchers compared Perch 2.0 with six other models, including Google’s Multispecies Whale Model (GMWM), which was trained specifically on whales. Performance was measured with AUC-ROC (area under the receiver operating characteristic curve). A score of 1.0 represents perfect discrimination, and 0.5 corresponds to random guessing.
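AUC-ROC has a convenient interpretation: it is the probability that a randomly chosen positive example gets a higher classifier score than a randomly chosen negative one. The short Python sketch below computes it directly from that definition. The scores and labels are invented for illustration. How the paper averages AUC-ROC across classes is not specified here.

```python
def auc_roc(scores, labels):
    """AUC-ROC as the probability that a random positive outranks a random negative.

    Ties count as half a win (the Mann-Whitney U formulation). This loops over
    every positive/negative pair, which is fine for a small illustration.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p_score in pos:
        for n_score in neg:
            if p_score > n_score:
                wins += 1.0
            elif p_score == n_score:
                wins += 0.5
    return wins / (len(pos) * len(neg))

# Hypothetical classifier scores for "orca call present" (1) vs. absent (0).
scores = [0.9, 0.8, 0.35, 0.6, 0.2, 0.1]
labels = [1, 1, 1, 0, 0, 0]
print(auc_roc(scores, labels))  # 8 of 9 positive/negative pairs ranked correctly
```

A perfect ranking yields 1.0, and a ranking no better than chance yields about 0.5. Libraries such as scikit-learn provide `sklearn.metrics.roc_auc_score`, which computes the same quantity more efficiently.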

Perch 2.0 ranks as the best or second-best model on nearly every task. When distinguishing between different orca subpopulations based on vocalizations, it achieves an AUC-ROC of 0.945, compared with 0.821 for the whale-specific model. For general underwater sound classification, Perch 2.0 reaches 0.977, while GMWM scores 0.914 — using just 16 training examples per category in both cases.

The difference becomes even more pronounced when GMWM is used directly as a classifier rather than for transfer learning: its performance drops to 0.612. The researchers suggest the model may have overfitted to specific microphones or other properties of its training data. Overall, specialization to a narrow domain appears to reduce generalization capability.

The “Bittern Lesson” of bioacoustics

The authors propose three explanations for the surprising cross-domain transfer.

First, neural scaling laws apply: larger models trained on more data generalize better, even to tasks outside their original domain.

Second, they introduce what they call the “Bittern Lesson,” a play on the bittern, a wading bird, and Rich Sutton’s well-known essay “The Bitter Lesson.” Bird classification is particularly challenging because differences between species are often extremely subtle. In North America alone, there are 14 species of doves with slightly different cooing patterns. A model that can reliably distinguish such fine-grained differences learns acoustic features that transfer well to entirely different tasks.

Third, there is an evolutionary explanation. Birds and marine mammals independently evolved a similar way of producing sound, known as the myoelastic-aerodynamic mechanism. This shared physical basis may explain why acoustic features transfer so effectively between the two groups.

Rapid classifiers for new discoveries

The practical significance lies in what the researchers call “agile modeling.” Passive acoustic data can be embedded into a vector database, and linear classifiers trained on precomputed embeddings can be created within hours. This is crucial in marine bioacoustics, where new sound types are discovered regularly. For example, the mysterious “biotwang” sound was only later attributed to Bryde’s whales.
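The retrieval step of such a workflow can be sketched as a nearest-neighbor search over stored embeddings. It runs in four steps:

1. Start from a single example of a new sound type.
2. Retrieve the most similar clips.
3. Have an expert label them.
4. Fit a linear classifier on the result.

The minimal Python sketch below assumes embeddings have already been computed. `EmbeddingIndex` is a hypothetical in-memory stand-in for a real vector database, which would typically use approximate search at scale. The clip IDs and three-dimensional vectors are made up.

```python
import heapq
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return sum(x * y for x, y in zip(a, b)) / (na * nb)

class EmbeddingIndex:
    """Hypothetical toy stand-in for a vector database of precomputed clip embeddings."""

    def __init__(self):
        self.ids, self.vectors = [], []

    def add(self, clip_id, vector):
        self.ids.append(clip_id)
        self.vectors.append(vector)

    def search(self, query, k):
        """Exact (brute-force) top-k search by cosine similarity, best match first."""
        scored = ((cosine(query, v), cid) for cid, v in zip(self.ids, self.vectors))
        return heapq.nlargest(k, scored)

index = EmbeddingIndex()
index.add("clip_001", [0.9, 0.1, 0.0])
index.add("clip_002", [0.0, 1.0, 0.2])
index.add("clip_003", [0.8, 0.2, 0.1])
index.add("clip_004", [0.1, 0.0, 1.0])

query = [1.0, 0.1, 0.0]  # hypothetical embedding of one example of a new sound type
for score, clip_id in index.search(query, k=2):
    print(f"{clip_id}: {score:.3f}")  # candidates to send to an expert for labeling
```

Because the audio is embedded only once, each new question needs only a cheap search and a small classifier fit, not another pass of the foundation model over the archive.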

Google has released an end-to-end tutorial on Google Colab and made the tools available on GitHub.
