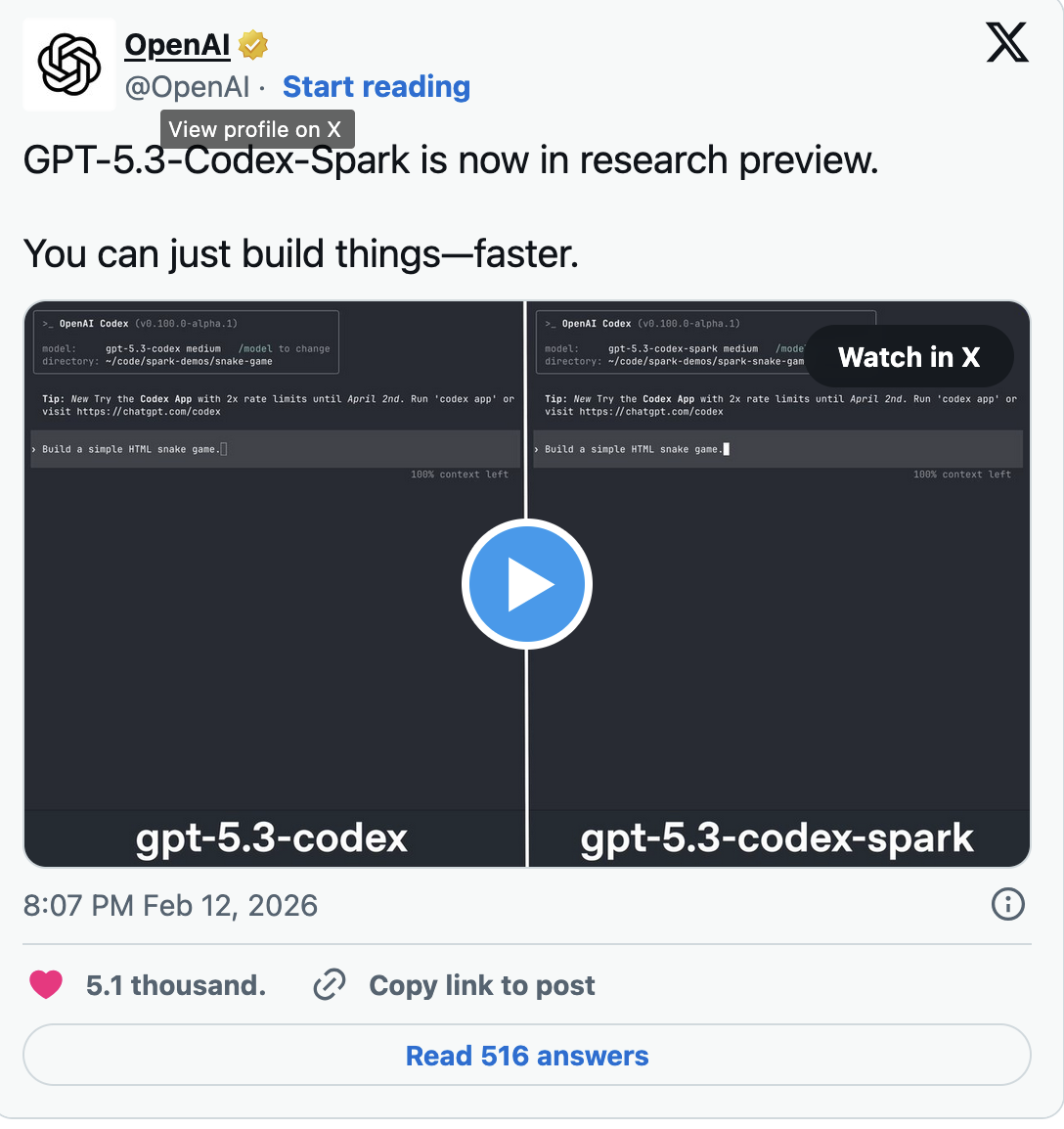

GPT-5.3-Codex-Spark is positioned as a scaled-down variant of the GPT-5.3 Codex model unveiled in February and is optimized for faster inference. To achieve this, OpenAI is using a specialized chip from its hardware partner Cerebras.

The two companies announced their partnership in January.

“Integrating Cerebras into our compute stack is aimed at making AI responses significantly faster,” OpenAI said at the time.

The company describes Codex-Spark as the “first milestone” of the partnership.

Codex-Spark is designed for high-speed, real-time operation and runs on Cerebras’ Wafer Scale Engine 3, the third generation of the company’s wafer-scale megachips, which packs four trillion transistors.

OpenAI characterizes the new tool as a “daily productivity driver” that supports rapid prototyping, while the original GPT-5.3 Codex is intended for longer and more resource-intensive tasks.

In its official statement, OpenAI emphasized that Spark was engineered to deliver the lowest possible latency in Codex.

“Codex-Spark is the first step toward Codex operating in two complementary modes: real-time collaboration for fast iteration and extended tasks that require deep reasoning,” the company said.
